On Monday morning, I began the week by going to the studio (as usual), feeling numb and very unhappy. I imported the wind environment files that George had sent the previous day, sizing them and placing them around Gloria, but one of the files he sent over was a .max file, which my laptop couldn’t open. George continued to send over various versions, but each one failed to import in some way. At this point, Sid came into the studio and told me what he was planning to work on. When he said that he’d be working on the ripple effect for instruments played in the overworld, we agreed that I’d handle colouring the ripples through code (when I got to that), though he’d see what he could do if I ran into any issues. Then, George sent over a package of his scene for the wind area, and when I imported it, I saw that colliders had been placed as barriers along the sides of platforms, but the planks of the bridges still had individual colliders. This wouldn’t work well with the movement system, so I asked George whether he could handle the colliders differently by creating a separate collider mesh in 3ds Max instead. While writing code to gradually change the camera position, I started feeling incredibly unhappy, and I left the studio for the campus’s “quiet room”.

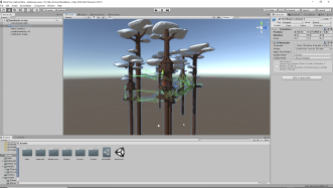

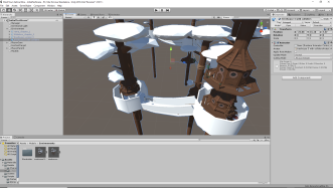

After being unable to work for a while, I imported George’s new version of the environment (with separate models for the colliders), placed and scaled it, and disabled the mesh renderers on the designated collider objects, adding mesh colliders to them instead. When testing the new colliders, I noticed that movement up slopes was quite jerky, so I removed the code that calculated vertical velocity when moving upwards (setting it to zero instead) and raised the character controller’s slope limit from 0° to 45°, which made things much smoother. I then positioned the cuboid representing the flautist within the new environment, briefly tested that everything was working, and sent a build of the game to the team to use in the afternoon’s scheduled testing session. After George told me that he and Ella found the wave mechanic too difficult, I halved its speed, doubled its size and sent them a new build. George then asked me to prioritise a camera that dynamically swings around trees, so I headed back to the studio to get his opinion in person on where the camera should be placed. Once I was back, my mental state plummeted, and I was once again unable to work for a fair amount of time (though I won’t go into details), eventually heading home.

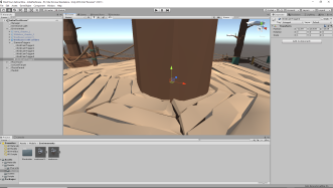

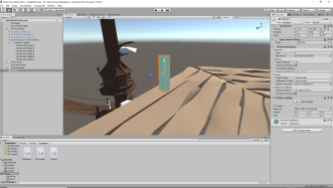

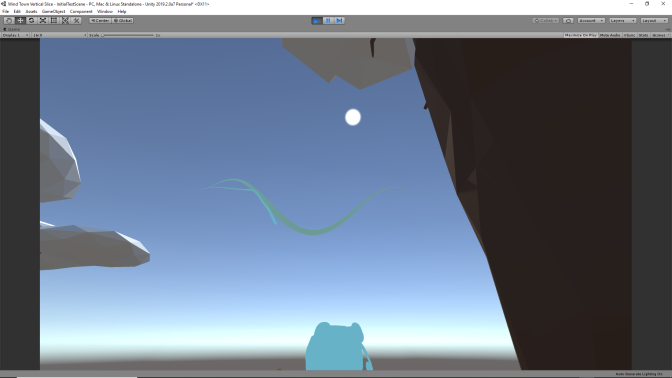

After dinner, though I was still numb and extremely unhappy, I forced myself to work through it. I placed a number of empty game objects at the centres of platforms to act as triggers for turning the camera when the player comes within a certain distance, and wrote a script to set the current camera position index based on which trigger the player is closest to. Then, after watching the first PlayStation State of Play video, I went back over the code I’d written earlier for transitioning between camera offset modifier values, multiplied the offset vector by this modifier, and added the resulting offset to the camera’s position calculation. I then spent some time tweaking camera values at specific points in the environment to keep the camera from clipping through objects, and moved the flautist so that the nearby tree wouldn’t obstruct the planned distant wave interaction. Next, I placed a few blank cubes in the distance to work out position values for the waves (so that I could properly integrate them into world space), and once I’d entered those values in the code, I made sure to set the line renderers to use world space instead of local space. I then kept tweaking and testing various wave positions until I felt I’d hit a good enough sweet spot, and I also had to adapt various variable values to fit the new size of the wave. While doing this, I saw that I was still factoring in the wave size modifier for the player’s trail displacement but not the character’s, so I removed it, which properly synchronised the trails again. I then used Photoshop to get the RGB values for the green of the flautist’s cloak from Ella’s turnaround sheet, so that I could change the colour of the character’s wave to match it (which also meant changing the midpoint colour). Then, after some more small tweaks to get things looking nicer, I pushed the changes to GitKraken and headed to bed.
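The camera logic described above can be sketched roughly as follows. This is a minimal Python illustration rather than the project’s actual Unity code, and it assumes that each platform-centre trigger has its own offset modifier (a hypothetical arrangement). It picks the trigger nearest the player, eases the modifier towards that trigger’s value each frame, and adds the scaled offset to the camera’s focus point.

```python
import math

Vec3 = tuple[float, float, float]

def closest_trigger_index(player: Vec3, triggers: list[Vec3]) -> int:
    """Index of the trigger point (platform centre) nearest to the player."""
    return min(range(len(triggers)), key=lambda i: math.dist(player, triggers[i]))

def step_modifier(current: float, target: float, rate: float, dt: float) -> float:
    """Move the offset modifier a fraction of the way towards its target each frame."""
    t = min(1.0, rate * dt)
    return current + (target - current) * t

def camera_position(focus: Vec3, base_offset: Vec3, modifier: float) -> Vec3:
    """Scale the base offset by the modifier, then add it to the focus point."""
    return tuple(f + o * modifier for f, o in zip(focus, base_offset))

# Hypothetical per-trigger modifiers: one value per platform centre.
triggers = [(0.0, 0.0, 0.0), (10.0, 0.0, 0.0), (20.0, 0.0, 5.0)]
modifiers = [1.0, 2.0, 1.5]

player = (9.0, 1.0, 0.0)
index = closest_trigger_index(player, triggers)
modifier = step_modifier(1.0, modifiers[index], rate=5.0, dt=0.1)
print(index, modifier, camera_position(player, (0.0, 2.0, -4.0), modifier))
```

Using `min(1, rate * dt)` as the interpolation factor is a common frame-based easing pattern. It isn’t perfectly frame-rate independent, but it is usually close enough for a following camera.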

On Tuesday morning, I went to the computer room and eventually imported Unity’s Post Processing Stack from the Asset Store, as I wanted to briefly piddle about with depth of field (to make screenshots and footage look more appealing). The import introduced an error that prevented the game from running, but I found a simple fix online: adding one line of code to the top of the problematic script. When we were moved over to the studio (as the computer room had been triple-booked), Adam came and spoke to me about the project, giving me three requests for changes to Monday’s test build. He wanted immediate visual feedback when the player makes contact with the character’s wave (to make it clear that that’s the right thing to do), some kind of aim assist for when the player is relatively close to the character’s wave, and the reversed synchronisation interaction to be long enough to be satisfying (as it was in the easier build). After speaking to Adam, I spent some time tweaking values so that the character’s trail would properly move on a sine wave upon reaching the third part of the interaction (before it transitions into following the player’s trail). I found that the sine wave’s counter was initially increasing too slowly, and that the character was also initially losing synchronisation too slowly (so the synchronisation level wasn’t resetting to zero), so I fixed both, and the interaction then worked as intended. At this point, Adam had me make a build of the game so that I could test it with a few people in Studio 8.
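A rough sketch of the third part of the interaction, assuming that the trail’s vertical offset is `amplitude * sin(counter)` and that synchronisation drains at a fixed rate. `SineLead`, `counter_speed` and `desync_rate` are hypothetical names, and the post doesn’t give the real values. Raising either rate corresponds to the kind of fix described above.

```python
import math

class SineLead:
    """Hypothetical trail driver for the third part of the interaction."""

    def __init__(self, amplitude: float, counter_speed: float, desync_rate: float, sync: float = 1.0):
        self.amplitude = amplitude
        self.counter_speed = counter_speed  # how fast the sine counter increases (radians per second)
        self.desync_rate = desync_rate      # synchronisation lost per second
        self.counter = 0.0
        self.sync = sync

    def update(self, dt: float) -> float:
        """Advance one frame and return the trail's vertical offset."""
        self.counter += self.counter_speed * dt
        self.sync = max(0.0, self.sync - self.desync_rate * dt)  # never drops below zero
        return self.amplitude * math.sin(self.counter)

lead = SineLead(amplitude=1.0, counter_speed=math.pi, desync_rate=2.0)
offset = lead.update(0.5)  # the counter reaches pi/2, the peak of the wave
print(round(offset, 3), lead.sync)
```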

Three people tested the game at that time (after we changed the TV’s settings to reduce input latency): two first-year students (I mistakenly didn’t ask for their names) and Chris O’Connor. They gave me a decent amount of feedback while playing, but I’ll only summarise a few key areas here. They praised the relaxing nature of the game, the cuteness of Gloria’s character design and how nice the environment is to move around in. They said that the movement is sensitive, and that it’s good that it takes some work to get used to, as it wouldn’t be satisfying if it were too easy. They found it frustrating that they couldn’t manually turn the camera around objects to see Gloria past obstructions, and suggested having the camera automatically turn to see around obstructive objects, having a silhouette of Gloria appear through them, or making the obstructions themselves translucent. They said that it’s currently difficult to see the waves against the sky (a known, temporary issue caused by the placeholder skybox), and they also suggested making the game more accessible for colour-blind players by offering colour options for different forms of colour blindness, with the selection only changing the key interactions rather than the whole world and visual composition.

When I got back to the studio, I showed Ella and Bernie the changes I’d made overnight, and Bernie told me that the camera should face inwards rather than constantly outwards, so that the player can see the forest and the environment that was made. Bernie then gave me a rundown of the feedback he’d noted from Monday’s testing session, and James chimed in with some ideas. The feedback reinforced the notion that the wave mechanic needs a clearer visual indication that the player is doing the right thing when following the character’s wave, especially since, as Ella pointed out, it takes longer to reach full synchronisation than it did in earlier prototypes. People also thought that the synchronisation had ended as soon as the roles reversed, and so did nothing, though I didn’t have this problem when I tested the game, presumably because I’d made the character properly move on a sine wave before following the player (to encourage more movement). Bernie suggested that the reversal could be made more obvious by having the character visually ping when he takes the lead, mirroring how the player pings to start the interaction, and James suggested an active pause at the role reversal (although I said that this would require rewriting the code for the interaction, and so wouldn’t be feasible). Bernie said that the lines could change direction as a cue to reverse roles, which I believe would be possible, and James suggested that a UI indicator (possibly a pair of held hands that tilts according to the synchronisation level) could show a change in who’s leading, though Bernie said that a UI element could detract from the experience.

Some of the feedback suggested that people were more satisfied with the more difficult version, finding the challenge of learning satisfying and the easier version too forgiving (since it could be completed while still making mistakes). There were also a few pieces of feedback on the controls. Some players felt that the stick’s sensitivity was divided into three areas (top, middle and bottom), which could be down to the physical resistance of the stick or, if they were moving it radially, the smaller change in vertical position when rotating the stick around its top and bottom. Someone else pointed out that certain vertical points feel unreachable, which I’d put down to the lerping of the player’s vertical position. Sid also felt that the input was generally weird and different from before, but when Bernie asked him to try the prototype again and tell us what was wrong, we found that the issues were down to a combination of a faulty controller and performance hiccups on Bernie’s laptop. After Bernie had given me all of the feedback he’d received, I told him the feedback I’d been given in the morning.
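The radial-stick explanation above is easiest to see with a little geometry. This sketch assumes, as a simplification, that the stick is held at full deflection and that its vertical input is the sine of its angle around the rim. Under that assumption, equal angular sweeps produce much smaller vertical changes near the top than near the side.

```python
import math

def vertical_change(start_deg: float, step_deg: float = 10.0) -> float:
    """Change in vertical input when the stick moves step_deg around its rim.

    Assumes full deflection, so the vertical input is sin(angle), where
    0 degrees points right and 90 degrees points straight up.
    """
    a = math.radians(start_deg)
    b = math.radians(start_deg + step_deg)
    return abs(math.sin(b) - math.sin(a))

middle = vertical_change(-5.0)  # sweeping from -5 to +5 degrees, through the horizontal
top = vertical_change(80.0)     # sweeping from 80 to 90 degrees, up to the top
print(f"middle: {middle:.3f}, top: {top:.3f}")  # middle: 0.174, top: 0.015
```

An equal sweep near the side of the rim changes the vertical input roughly eleven times as much as one near the top. That would make the top and bottom feel far less responsive than the middle.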

After this, Adam gathered the team for a meeting in Studio 8, where we discussed schedules and roles for the team up to, during and after the Easter break. Adam started by acknowledging that we’re running out of time, but told us that what we want is a whole experience, not just a level, so if anything has to take a hit, we should be cutting back on quality. He suggested that Ella take over the role of handling user testing, so that we can reach the intended emotional experience and story. He also suggested that our processes are still too linear (“waterfall”) and need to be more iterative, though we need to be able to just get on with what we’re working on without checking every detail with the team. One big thing Adam suggested was that we need to focus on the characters over the environments, as the characters are key to the experience. However, he wants to be able to walk through all of the environments as soon as possible, so he suggested tasking Bernie with creating white boxes for what remains. Adam said that the most important thing is that I get the synchronisation interactions in, with everything else (such as the suggested changes to how the camera works) being extraneous. In terms of aesthetics, Adam said that we should prioritise having Gloria visually play her violin and animating the characters in general, as they’re what convey the game’s emotions. In terms of areas, Adam said that since the string area and the tower are the most important (being the first and last, respectively), they should be the most polished and the best-feeling. Then, after suggesting how we arrange our GitKraken branches, he determined that we need to come up with the piano mechanic, as well as how the other characters join in (at least visually). Below are photos of what Adam wrote on the whiteboard.

After the group discussion, Adam brought me over to his office to test the process of bringing GitKraken branches together. While we went through the process, he told me that we need to settle on a Unity version to stick to, and that branches should focus on small feature additions and be pulled from and pushed to frequently. The idea, however, is that I work on the master branch while the others work on separate branches from it. After that, we returned to the studio, and Adam showed Bernie how to use GitKraken, as well as having me pull in the changes from Bernie’s pull requests (though having multiple simultaneous pull requests led to errors, and none of the actual changes were saved). After doing this, I headed home.

A while after dinner, I came up with and programmed the aim assist system that I’d wanted to sort out earlier in the day. I then changed a couple of lines of code to factor in the aim assist, and set some initial values for testing it. After testing it, I realised that I was calculating the aim assist value incorrectly, and that I should have inverted one part of the calculation, so I did that. I also added a condition requiring the wave to be in range for the assist to kick in, so that it doesn’t affect the player’s movement before the wave is reached or after the roles have been reversed. Then, I spent some time tweaking the aim assist’s values to make it feel right, but it still felt overly intrusive and snappy. That changed when I made it lerp towards its target value, which made it feel far less commanding. I then increased the acceleration rate of the player’s physical movement when interacting, so that the player would come to a stop more quickly when cuing the reaction (because of what happened to one of the players in Monday’s testing session). Adam also sent me some code to add controller support in the editor on a Mac, so I read through it until I understood it, and then rewrote it in my script for triggering interactions. After pushing all of the changes to GitKraken, I headed to bed.
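Here is a minimal sketch of an aim assist along these lines. Every name and value in it is hypothetical. The assist only has a target while the wave is in range and the roles haven’t reversed, and that target nudges the player’s vertical position towards the wave. The applied value is eased towards the target each frame rather than snapped to it, which mirrors the change that made it feel less commanding.

```python
def assist_target(player_y: float, wave_y: float, radius: float,
                  strength: float, active: bool) -> float:
    """Hypothetical vertical nudge towards the wave.

    Returns 0 when the assist is inactive (wave out of range or roles reversed)
    or when the player is further than `radius` from the wave.
    """
    if not active:
        return 0.0
    gap = wave_y - player_y
    if abs(gap) >= radius:
        return 0.0
    closeness = 1.0 - abs(gap) / radius  # 1 when aligned, 0 at the edge of the radius
    return gap * closeness * strength


def step_assist(current: float, target: float, rate: float, dt: float) -> float:
    """Lerp the applied assist towards its target instead of snapping to it."""
    return current + (target - current) * min(1.0, rate * dt)


# Three frames of easing towards the target while the player sits just below the wave.
assist = 0.0
for _ in range(3):
    target = assist_target(player_y=0.0, wave_y=0.5, radius=1.0, strength=1.0, active=True)
    assist = step_assist(assist, target, rate=4.0, dt=0.1)
print(round(assist, 3))
```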

When I went to the studio on Wednesday morning, I spent a chunk of time watching parts of Apple’s recent Keynote. Afterwards, Adam came into the studio and showed me that, in an actual build of the project, I could choose which inputs to use depending on the platform, so I quickly added what he showed me to the code for triggering interactions. We then spoke a bit about possible ways to store and share builds for different platforms via GitKraken, until he left. After this, I programmed a subroutine that, when interacting, sets the camera’s lateral offset to the lateral midpoint between Gloria and the flautist. I then added separate right-stick inputs to the project for Windows and Mac, and programmed the calls for the right stick to switch between these inputs based on the current platform.
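Both pieces of logic in this paragraph are small enough to sketch. The Python below assumes that “lateral” means the horizontal x/z plane, and it uses made-up input names (`RightStickX_Mac` and so on). In Unity itself, the platform check would typically use `Application.platform` or platform-dependent compilation symbols such as `UNITY_STANDALONE_OSX`. Here, `sys.platform` only stands in for that.

```python
import sys

# Hypothetical input names; the real ones depend on the project's input settings.
RIGHT_STICK_INPUTS = {
    "darwin": ("RightStickX_Mac", "RightStickY_Mac"),
    "win32": ("RightStickX_Win", "RightStickY_Win"),
}

def right_stick_inputs(platform: str = sys.platform) -> tuple[str, str]:
    """Pick the right-stick input names for a platform, falling back to the Windows ones."""
    return RIGHT_STICK_INPUTS.get(platform, RIGHT_STICK_INPUTS["win32"])

def lateral_midpoint(a, b):
    """Midpoint of two (x, y, z) positions on the horizontal x/z plane, ignoring height."""
    return ((a[0] + b[0]) / 2.0, (a[2] + b[2]) / 2.0)

gloria = (0.0, 1.0, 0.0)
flautist = (4.0, 3.0, -2.0)
print(right_stick_inputs(), lateral_midpoint(gloria, flautist))
```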

After lunch, I eventually added some code to set Gloria’s rotation at an angle to the flautist when interacting with him. I then had Ella and Bernie test the wave mechanic with aim assist (without telling them about it), and they both said that it felt good and was easier to play. Then, I programmed the flautist to rotate in the same way as Gloria during the wave interaction, and I edited a couple of Bernie’s images for him to upload to social media. After that, I headed home, and after dinner I planned out and booked my travel for the EGX Rezzed trip the following Friday. Then, before heading to bed, I spent a good while writing about my experience with the course and editing what I’d written (before posting it to the shared document we’d been asked to use).

On Thursday morning, I went to the studio and eventually started thinking about how I was going to program the “ping system”. After a fair amount of thought, I added some code to make the player rotate to face whomever they’re pinging with. I also tweaked the code for finding the directional vectors between the player and the flautist so that it treats the vectors as two-dimensional, only using the x and z axes when finding distances. I then wrote a subroutine in a new script to determine whether a character should be “pinging” with the player, and to change whom the player faces based on which character is closest. After this, I headed to my First Support appointment.
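This sketch of the flattened-vector idea assumes that positions are `(x, y, z)` tuples with y as height. The offset and distance ignore y, and the facing angle follows Unity’s convention that a y rotation of 0° looks along +z and 90° looks along +x. `flat_offset`, `facing_yaw`, `closest_character` and the sample positions are illustrative names and values, not taken from the project.

```python
import math

def flat_offset(from_pos, to_pos):
    """Offset between two (x, y, z) positions on the x/z plane only."""
    return (to_pos[0] - from_pos[0], to_pos[2] - from_pos[2])

def flat_distance(a, b):
    """Distance on the x/z plane, ignoring any height difference."""
    dx, dz = flat_offset(a, b)
    return math.hypot(dx, dz)

def facing_yaw(from_pos, to_pos):
    """Yaw in degrees to face the target: 0 looks along +z, 90 along +x."""
    dx, dz = flat_offset(from_pos, to_pos)
    return math.degrees(math.atan2(dx, dz))

def closest_character(player, characters):
    """Name of the character nearest the player on the x/z plane."""
    return min(characters, key=lambda name: flat_distance(player, characters[name]))

player = (0.0, 0.0, 0.0)
characters = {"flautist": (3.0, 10.0, 4.0), "box": (0.0, 0.0, 6.0)}
nearest = closest_character(player, characters)
print(nearest, facing_yaw(player, characters[nearest]))
```

With full 3D distances, the box (6 units away) would beat the flautist (about 11.2 units away, mostly vertically), so flattening the vectors changes which character the player turns to face.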

After the appointment and lunch, I sent the synchronisation colours to Sid for him to use for the ping system particles, but he felt that they were too similar. After he had Ella and me look at the colours, I agreed with Ella that we should take the flautist’s green from the key art’s rainbow instead of from the flautist’s cloak. So, in Photoshop, I got the RGB values of that green and calculated the new midpoint’s values, before sending all of this to Sid. After that, I wrote a subroutine to set the rotation of NPCs based on whether they’re pinging, and then Sid called Ella and me over to look at the particle system and give some feedback. Next, I added two boxes with the pinging script attached to the scene to test whether it was working, and while they were turning correctly, the player was only turning after a delay. I noticed that the counter tracking how many characters were responding to the player’s ping wasn’t decreasing from 2, so I looked back over the code and found that I wasn’t setting the Booleans that allow the ping count to be reduced. Setting the Booleans properly fixed the issue, but there was still a slight delay in the player turning, because they weren’t slowing down quickly enough when moving before plucking the violin. I made the player decelerate more quickly when pinging with a character while the analogue stick is within the deadzone, and I also slightly widened that deadzone. When I showed Bernie the working ping system, he said that players would mash the pluck button and make the characters freak out, so I offered to put a cooldown on the player’s ability to play the violin. Bernie then sent me a version of the wind area building he’d modelled, with materials he’d made unlit in Cinema 4D, to see whether they would remain unlit when imported into Unity (to save time when implementing them), but this didn’t work. After this, I headed home.
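Working out a midpoint colour comes down to a per-channel average. This sketch assumes a straight average of 0 to 255 sRGB values, since the post doesn’t say exactly how the midpoint was calculated. The two input colours are placeholders, not Gloria’s or the flautist’s real values.

```python
def midpoint_rgb(a: tuple[int, int, int], b: tuple[int, int, int]) -> tuple[int, int, int]:
    """Per-channel average of two 0-255 RGB colours, rounded to whole values.

    Python's round() sends exact halves to the nearest even number.
    """
    return tuple(round((x + y) / 2) for x, y in zip(a, b))

def to_hex(rgb: tuple[int, int, int]) -> str:
    """Format an RGB triple as a hex colour code, as shown in Photoshop's colour picker."""
    return "#{:02X}{:02X}{:02X}".format(*rgb)

# Placeholder colours, not the project's real values.
colour_a = (230, 120, 60)
colour_b = (60, 180, 90)
mid = midpoint_rgb(colour_a, colour_b)
print(mid, to_hex(mid))  # (145, 150, 75) #91964B
```

Averaging in linear light instead would give a slightly brighter midpoint. For picking a gradient midpoint by eye, the straight sRGB average is usually fine.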

After dinner, I programmed a subroutine to set when the player is bowing their violin (by holding down what would otherwise just be the pluck button during world traversal). I then added conditions to other scripts to make bowing visibly behave like plucking, and while it was mostly working, the value that tracks the closest distance of a pinging character wasn’t being reset. This meant that the player would always look at whichever character they’d got closest to at any point while bowing, rather than the one they were currently closest to. After some piddling about, I fixed this by resetting the closest-distance value at the end of each frame (after the player’s rotation has been set accordingly). Then, using the new ping behaviour script as a reference, I made the flautist set his rotation in response to the player’s plucking or bowing once the wave synchronisation has happened. After I pushed the changes to GitKraken, I went to bed.
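The bug and its fix can be shown with a small tracker. This sketch assumes that each pinging character reports its distance once per frame, and `ClosestPingTracker` and `run_frame` are hypothetical names. The important line is the reset after the target has been used. Without it, the second frame below would still return the flautist, because the 2.0 recorded in the first frame beats 3.0.

```python
import math

class ClosestPingTracker:
    """Records which pinging character is nearest to the player during one frame."""

    def __init__(self):
        self.reset()

    def reset(self):
        self.closest_name = None
        self.closest_distance = math.inf

    def report(self, name, distance):
        """Called once per pinging character each frame."""
        if distance < self.closest_distance:
            self.closest_name = name
            self.closest_distance = distance

def run_frame(tracker, distances):
    """Collect this frame's distances, return whom to face, then reset for the next frame."""
    for name, distance in distances.items():
        tracker.report(name, distance)
    target = tracker.closest_name  # the player's rotation would be set towards this character
    tracker.reset()                # the fix: forget this frame's closest distance
    return target

tracker = ClosestPingTracker()
print(run_frame(tracker, {"flautist": 2.0, "box": 5.0}))  # flautist
print(run_frame(tracker, {"flautist": 6.0, "box": 3.0}))  # box
```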

On Friday morning, I went to the studio and spent some time working on my business card design. When George came in, I showed him the ping system and had him try the aim-assisted wave mechanic, and he said that since it’s easier now, the interaction can be longer. Adam then came and spoke to the class about what to get done over Easter to promote ourselves and the show, how to go about planning our EGX Rezzed schedules and why we should consider visiting Now Play This. Once Adam was done, I briefly spoke to George and Ella about what we were planning to work on, and we agreed that George and I would focus on designing the piano mechanic together while Ella worked on refining the symbols for the ping system. After that brief discussion, I programmed a subroutine to start a cooldown timer when the player stops bowing or plucking, and required the timer to reach a certain value before plucking or bowing can be triggered again. Then, after Bernie tried the cooldown and gave me feedback, I doubled its length.
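Here is a minimal sketch of the cooldown. It assumes that the timer restarts when the player stops plucking or bowing, and that playing is only allowed once the timer reaches the cooldown length. `PlayCooldown` and the 0.5-second length are hypothetical, since the post doesn’t give the actual duration. Doubling it would simply mean doubling `length`.

```python
class PlayCooldown:
    """Blocks plucking and bowing until enough time has passed since the player stopped."""

    def __init__(self, length: float):
        self.length = length
        self.elapsed = length  # start out ready to play

    def stop_playing(self) -> None:
        """Restart the timer when the player stops plucking or bowing."""
        self.elapsed = 0.0

    def tick(self, dt: float) -> None:
        """Advance the timer by one frame, capped at the cooldown length."""
        self.elapsed = min(self.length, self.elapsed + dt)

    def can_play(self) -> bool:
        return self.elapsed >= self.length

cooldown = PlayCooldown(length=0.5)
cooldown.stop_playing()
cooldown.tick(0.25)
print(cooldown.can_play())  # False: only halfway through the cooldown
cooldown.tick(0.25)
print(cooldown.can_play())  # True
```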

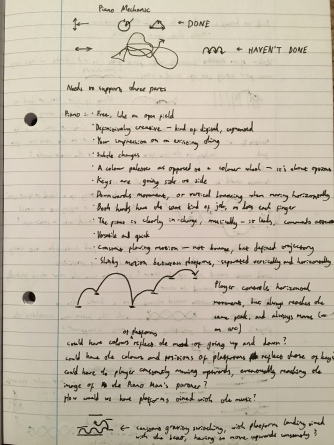

Sid then showed Ella and me the ping system’s particles (they looked really nice), how he was making them work and how I could adapt them in code if needed. I then thought about and took more notes on the brass area’s ball mechanic. After this, George and I spent a chunk of time designing the piano mechanic, first identifying which kinds of motion we’d covered with the previous mechanics and which we hadn’t yet. We then laid out what we think of when we think of pianos, and decided on a constant, flowing, bouncing motion resembling that of a Slinky (due to the graceful motion of hands across a piano, and the way fingers bounce between keys). Initially, George suggested that the player move between floating platforms at varying vertical and horizontal positions, and I said that they could always move on an arc that reaches the same peak. When George said that the colours of the platforms could suggest positive and negative emotions as they move up and down, I suggested that the vertical positions and colours of the platforms could reflect the keys of a piano. Then, I wondered whether the interaction could have the player constantly moving upwards, eventually reaching an image of the Piano Man’s partner. However, a big concern was how we could feasibly time the platforms with the music, as having different landing times based on vertical position would make the player lose synchronisation with the beat. I felt that one way of keeping with the beat would be to have the direction of gravity switch in time with it, with the player ascending by falling onto higher platforms, so that they’d fall upwards from a greater height and reach higher peaks. However, we felt that we were losing focus, so we went back to the basics: adhering to the three-part structure, synchronising with the Piano Man and keeping the gameplay immediately intuitive.

When George suggested a mechanic in which Gloria bounces once for every four bounces of the Piano Man (due to the violin’s tendency to sustain notes when supporting, and the piano’s tendency to deliver them more quickly), I said that the player could be required to land on the same space as the Piano Man, ascending to the next level upon bouncing if they do. George suggested mirroring the player’s and the Piano Man’s motions, possibly switching over at times, and I said that there’d need to be a safety net beneath or around platforms to avoid too much difficulty or tedium. I then suggested that the role reversal could feature no platforms, instead having the player and the Piano Man move in a double helix, with the Piano Man trying to match the player’s horizontal position. After this, thinking about the need for the bounces to sync up, we came up with the idea we settled on: the Piano Man’s line bounces on a circle of black and white keys around the bottom of the tower, and the player’s line bounces in the opposite direction on a parallel circle of keys. Each time the player lands on the same key as the Piano Man, the two circles get closer (though they gradually drift apart again), and when they’re close enough, the two lines become one. Then, this line is broken with one final bounce, and the Piano Man begins trying to match the player’s horizontal movements as the two move in a double helix. Eventually, the lines turn and fall upwards, up the tower, and the other characters’ lines join in. When the two lines reach the top of the tower, they reveal everyone at the top, playing together. Below are my notes from the session with George.
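The convergence rule at the heart of this piano design can be sketched as a single gap value between the two circles of keys. Every name and number below is a placeholder, since the mechanic was only at the design stage. Matching landings pull the circles together, the circles drift apart over time, and they merge once the gap is small enough.

```python
from dataclasses import dataclass

@dataclass
class KeyRings:
    """Hypothetical model of the gap between the player's and the Piano Man's circles of keys."""
    gap: float               # current distance between the two circles
    pull_per_match: float    # how much closer a matching landing brings them
    drift_per_second: float  # how quickly they drift apart again
    merge_gap: float         # at or below this, the two lines become one
    max_gap: float           # the circles never drift further apart than this
    merged: bool = False

    def land(self, player_key: int, piano_man_key: int) -> None:
        """Pull the circles together when both land on the same key."""
        if self.merged or player_key != piano_man_key:
            return
        self.gap = max(0.0, self.gap - self.pull_per_match)
        if self.gap <= self.merge_gap:
            self.merged = True

    def update(self, dt: float) -> None:
        """Let the circles drift apart over time until they have merged."""
        if not self.merged:
            self.gap = min(self.max_gap, self.gap + self.drift_per_second * dt)

rings = KeyRings(gap=3.0, pull_per_match=1.0, drift_per_second=0.1, merge_gap=0.5, max_gap=3.0)
rings.land(4, 4)    # match: gap 3.0 -> 2.0
rings.update(1.0)   # drift: gap 2.0 -> 2.1
rings.land(4, 5)    # different keys: no change
rings.land(2, 2)    # match: gap -> 1.1
rings.land(7, 7)    # match: gap -> about 0.1, so the lines merge
print(rings.merged, round(rings.gap, 2))
```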

Right as we had the breakthrough, Adam came and asked George and me about the piano mechanic. When we explained it to him, he said that he liked it, and told me to work on it before anything else, switching to the brass area’s ball mechanic if I needed a break. When I explained the idea to Ella, she said it sounded good. After this, I started feeling unhappy, and wasn’t able to think straight for a while. Sam sent over some music samples, and when a few of us listened to them, they all sounded really good. At this point, I headed home. After dinner, I recorded and edited some footage of the wind area’s build in action, and uploaded the video to social media. I then spent a fair amount of time continuing to work on my business card designs, before ordering the cards and heading to bed.

On Saturday, I packed my things and got ready to head back to Maidstone, where I’ll be for around a week. After I met up with my dad and brother, we got lunch and headed there. I then spent the afternoon unpacking and trying to get comfortable, though I was feeling quite unhappy. That brings me to the evening, when I started writing this blog post, continuing until I headed to bed. I then spent most of Sunday working on this post, feeling very unhappy while doing so. Over the coming week, I know I’ll be meeting some friends on a couple of days, but I intend to spend a decent amount of time programming the piano mechanic that we designed. Also, on Friday, I’ll be going to EGX Rezzed, so I’ll see what I can learn from that experience. I’m sure it’ll be good, anyway. Regardless of what happens, I’ll see how everything goes in next week’s post.